KaaS Architecture

Rackspace Kubernetes-as-a-Service

Last updated: Feb 11, 2022

Release: v6.0.2

This section is intended for anyone who wants to learn more about the Rackspace KaaS architecture and design. This section provides an overview of the main components and services, such as kaasctl, the KaaS control panel, OAuth Proxy, and so on.

- Preface

- Cloud providers

- Managed Services

- Clusters

- Tooling

- Authentication and authorization

- Document history and additional information

- Disclaimer

Preface

Rackspace offers 24x7x365 support for Rackspace Kubernetes-as-a-Service (KaaS). To learn about support for your cloud or to take advantage of our training offerings, open a support ticket or contact your Account Manager.

Cloud providers

KaaS supports multiple cloud providers. Although most of the current deployments integrate with OpenStack-based environments, KaaS can extend its support to other clouds and provide the same set of managed services.

The list of supported cloud providers includes the following platforms:

- OpenStack®

- Red Hat® OpenStack Platform

- Amazon® Elastic Kubernetes Service (Amazon EKS)

During the deployment, kaasctl provisions a Kubernetes cluster and Rackspace KaaS managed services on top of the supported cloud provider platform. The provisioned version of Kubernetes is conformant with upstream Kubernetes and supports all standard tools and plug-ins. KaaS deploys a version that is specified in the release notes. The KaaS team incorporates patches, updates, and Common Vulnerabilities and Exposures (CVE™) fixes regularly.

KaaS ensures secure communication between Kubernetes worker nodes and the underlying control plane for all cloud providers.

Learn more about the supported cloud providers in the following sections:

Amazon EKS overview

Amazon® Elastic Kubernetes Service (EKS) enables you to run containerized applications by using Kubernetes that runs on top of Amazon Virtual Private Cloud (VPC). KaaS integrates with Amazon APIs to simplify the deployment and management operations of the Kubernetes infrastructure.

Amazon manages the underlying Kubernetes control plane, which includes Kubernetes master nodes and etcd nodes, while users and operators are responsible for the Kubernetes worker nodes.

Kubernetes administrators do not have control over the Amazon control plane resources. Amazon is responsible for provisioning, managing, and scaling the underlying infrastructure up and down to accommodate cluster needs and performance.

Deployment workflow

When you deploy a Kubernetes cluster by using KaaS, KaaS creates the following items:

- A VPC on which KaaS deploys Kubernetes master nodes. All underlying infrastructure is highly available and managed by Amazon. KaaS deploys one VPC per EKS cluster.

- A highly available Kubernetes control plane that includes master nodes and etcd nodes. Because Amazon handles the control plane management, you see only the worker nodes when you run kaasctl get nodes.

- Kubernetes worker nodes based on the default EKS-optimized Amazon Machine Images (AMI). Support for custom AMIs will be added in future versions.

- Security groups that supervise cluster communication with the VPC, worker nodes, and the Elastic Load Balancing (ELB) service.

- Kubernetes subnetworks. By default, KaaS deploys two fully routable subnetworks, a routing table that is associated with these subnetworks, and an Internet gateway.

- A role that enables Kubernetes to create resources in Amazon EC2. The role is added to the Kubernetes role-based access control (RBAC) table as admin.

- Other standard Amazon services, such as Elastic Container Registry (ECR).

Networking

EKS network topology is tightly integrated with VPC. EKS uses a Container Network Interface (CNI) plug-in which integrates the Kubernetes network with the VPC network.

Kubernetes pods have internal IP addresses that they use to connect to the Elastic Network Interface (ENI). These IP addresses belong to the subnet in which worker nodes are deployed. These pod IP addresses are fully routable within the VPC network. All VPC network policies and security groups apply to these IP addresses.

On each EC2 instance, Kubernetes runs a DaemonSet that hosts the CNI plug-in. The CNI communicates with the local network control plane that maintains a pool of IP addresses. When kubelet schedules a pod, it accesses the pool of IP addresses, and the CNI assigns an IP address from the pool to the pod.
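As a rough illustration of the pool-based assignment described above, the following Python sketch models a per-node pool of pod IP addresses. The class name NodeIpPool, the subnet, and the pool size are hypothetical and exist only to show the idea; this is not the code of the actual CNI plug-in.

```python
import ipaddress
from itertools import islice
from typing import Dict, List


class NodeIpPool:
    """Toy model of a per-node pool of pod IP addresses (hypothetical)."""

    def __init__(self, subnet: str, size: int) -> None:
        # Reserve the first `size` host addresses of the worker-node subnet.
        hosts = ipaddress.ip_network(subnet).hosts()
        self.free: List[str] = [str(ip) for ip in islice(hosts, size)]
        self.assigned: Dict[str, str] = {}

    def assign(self, pod: str) -> str:
        """Hand the next free address to a pod."""
        if not self.free:
            raise RuntimeError("IP pool exhausted")
        ip = self.free.pop(0)
        self.assigned[pod] = ip
        return ip

    def release(self, pod: str) -> None:
        """Return a deleted pod's address to the end of the pool."""
        self.free.append(self.assigned.pop(pod))


if __name__ == "__main__":
    pool = NodeIpPool("10.0.1.0/24", size=2)
    print(pool.assign("web-1"))
    print(pool.assign("web-2"))
```

Because the addresses come from the worker-node subnet, every assigned address in this model is one that the VPC could route, which mirrors the behavior described in this section.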

In Amazon EKS deployments, CoreDNS is used by default for all Kubernetes clusters version 1.11 or later.

When you create a LoadBalancer service type in Kubernetes, an ELB is created in AWS.

Storage

Amazon EKS uses Amazon Elastic Block Store (Amazon EBS) to store persistent data. Amazon automatically replicates each EBS volume within its availability zone to ensure data resilience. By default, when you create a Persistent Volume Claim (PVC), Kubernetes requests a volume in EBS.
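For illustration, a claim like the one described above could be expressed as follows. This is a minimal sketch: the helper name build_pvc, the claim name, and the requested size are assumptions, not values taken from a KaaS deployment. The manifest is built as a Python dictionary and printed as JSON.

```python
import json


def build_pvc(name: str, size: str) -> dict:
    """Return a PersistentVolumeClaim manifest that relies on the default storage class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: the volume is mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
            # storageClassName is omitted on purpose so the cluster default applies.
        },
    }


if __name__ == "__main__":
    # "data-claim" and "10Gi" are placeholder values.
    print(json.dumps(build_pvc("data-claim", "10Gi"), indent=2))
```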

High availability

To ensure high availability, Amazon deploys VPC nodes, Kubernetes nodes, and storage resources across multiple availability zones (AZ) and regions. Each region is located in a different part of the world, and each AZ is a physical location within that region. Although you can deploy Kubernetes in a limited number of regions, your resources are automatically placed in separate AZs to ensure redundancy. You need to specify the region, such as us-east-1, in the provider configuration file for your EKS cluster deployment.

For more information about the supported regions and availability zones for Amazon EKS, see Region Table and AWS Regions and Endpoints.

Managed services

KaaS aims to deploy all managed services described in the KaaS overview in your Amazon EKS cluster. However, the deployment functionality for some of the services is currently a work in progress.

For disaster recovery purposes, VMware® Velero stores backups in the Amazon S3 object store buckets.

Upgrades

Amazon handles upgrades of the Kubernetes control plane and the underlying VPC components.

KaaS is responsible for upgrading kaasctl and all the managed services components.

OpenStack Kubernetes overview

Rackspace Kubernetes-as-a-Service (KaaS) enables deployment engineers to deploy Kubernetes® and Rackspace Managed Services on top of supported cloud platforms, such as Rackspace Private Cloud Powered by OpenStack (RPCO) and Rackspace Private Cloud Powered by Red Hat® (RPCR).

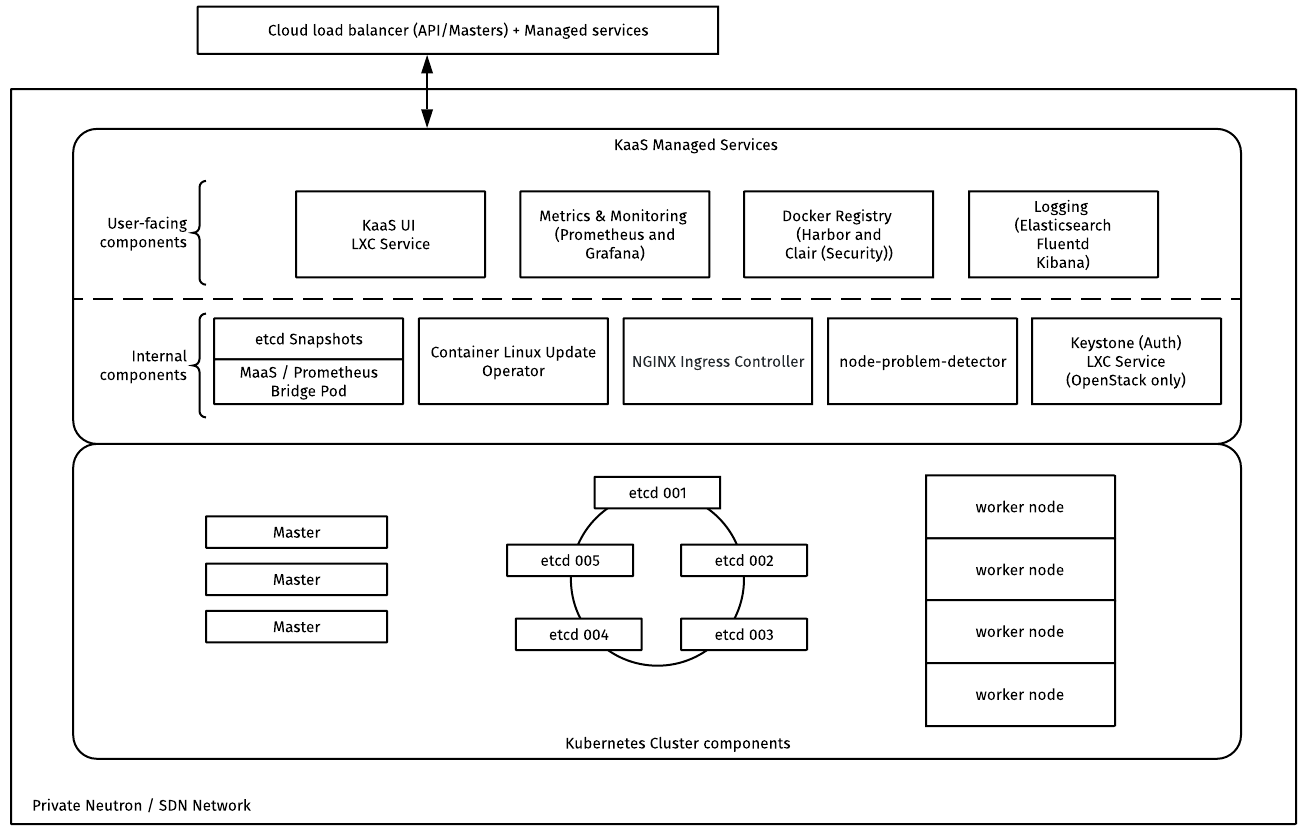

The following diagram provides a simplified overview of Rackspace KaaS deployed on an OpenStack cloud provider:

Networking

KaaS networking varies by cloud provider. For example, in OpenStack deployments, KaaS uses neutron for private networking.

DNS

KaaS requires a Domain Name Service (DNS) server configured on the cloud provider side to handle Kubernetes node name resolution and redirect requests between the cloud provider and the Kubernetes nodes.

In OpenStack deployments, KaaS communicates through OpenStack DNS-as-a-Service (designate), which provides REST APIs for record management and integrates with the OpenStack Identity service (keystone). When KaaS creates Kubernetes nodes, it adds DNS records for the nodes to the designate DNS zone. Verify that designate is configured before you deploy Kubernetes.

External load balancers

External load balancers ensure even user workload distribution among Kubernetes worker nodes. KaaS requires an external load balancer to be configured for your deployment. For OpenStack deployments, KaaS uses OpenStack Load Balancing as a Service (octavia).

When you create a service, you can specify type: LoadBalancer in the service configuration file to enable load balancing for that application.
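As a hedged example of such a service configuration, the sketch below builds a Service manifest of type LoadBalancer as a Python dictionary and prints it as JSON. The helper name, the service name my-app, the app label, and the port numbers are hypothetical placeholders.

```python
import json


def load_balancer_service(name: str, app_label: str, port: int, target_port: int) -> dict:
    """Return a Service manifest that asks the cloud provider for an external load balancer."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # This field is what triggers provisioning of the external load balancer.
            "type": "LoadBalancer",
            # Traffic is forwarded to pods that carry this label.
            "selector": {"app": app_label},
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    # Placeholder values; replace them with your application's name and ports.
    print(json.dumps(load_balancer_service("my-app", "my-app", 80, 8080), indent=2))
```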

OpenStack network diagrams

The following diagram describes a production architecture that uses OpenStack Networking service (neutron), a private network and floating IPs for master nodes:

The following diagram describes an extended production architecture that uses OpenStack Networking service (neutron) and Load Balancer as a Service (LBaaS):

Networking inside Kubernetes

User applications run in Kubernetes pods. Each pod runs one instance of an application. Kubernetes does not provide out-of-the-box support for networking between pods. Therefore, a Kubernetes provider can select its own network solution by using a CNI plug-in. KaaS implements Calico® as a networking solution for Kubernetes. One of the main advantages of Calico is support for network policies that enable network access control between Kubernetes pods.
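To make the idea of pod-level access control more concrete, the following sketch builds a standard Kubernetes NetworkPolicy manifest that admits ingress traffic to one group of pods only from another group of pods. The helper name and all label, namespace, and port values are hypothetical; the source does not prescribe any particular policy.

```python
import json


def allow_from_label(name: str, namespace: str, target_app: str,
                     client_app: str, port: int) -> dict:
    """Return a NetworkPolicy that lets only `client_app` pods reach `target_app` pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods that the policy protects.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only pods with this label in the same namespace may connect.
                    "from": [{"podSelector": {"matchLabels": {"app": client_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    policy = allow_from_label("db-allow-api", "default", "db", "api", 5432)
    print(json.dumps(policy, indent=2))
```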

Storage

KaaS relies on block storage as a service in all supported cloud providers.

In OpenStack, KaaS uses Ceph to ensure high availability (HA) for cinder volumes that are not HA by default. KaaS might support third-party solutions through the deal exception approval process.

In Amazon EKS deployments, KaaS uses Amazon Elastic Block Store (Amazon EBS).

PersistentVolumes and PersistentVolumeClaims

A Persistent Volume (PV) is network storage that has been provisioned in the cluster either statically by a person or dynamically by a storage class. A Persistent Volume Claim (PVC) is a request for storage that a user creates. The Controller Manager is a component of the control plane that manages all the reconciliation loops in a Kubernetes cluster.

The Persistent Volume Controller is a series of reconciliation loops that continually monitor the current state of PV and PVCs and attempt to get them to the desired state.

PV and PVC relationship

There is a one-to-one mapping between PV and PVC. If multiple pods need to access the same data, they need to share the same PVC.

When a user creates a PVC, the Persistent Volume Controller initiates the following algorithm:

- If the PVC manifest references a specific PV name, the Persistent Volume Controller attempts to retrieve the referenced PV.

  - If the Persistent Volume Controller cannot find the PV, it continues to wait indefinitely in anticipation that the administrator provisions the PV manually.

  - If the Persistent Volume Controller finds that the PV does not have current PVCs bound to it, it binds the PV to the original PVC.

  - If the Persistent Volume Controller finds that the PV is already bound to another PVC, it returns an error.

- If the PVC manifest does not reference a specific PV name, the user does not require a specific PV. Therefore, the Persistent Volume Controller performs the following steps:

  - The Persistent Volume Controller provisions a PV dynamically by using the defined storage class.

  - The Persistent Volume Controller reconciliation loop continuously tries to find a PV that is either prebound or dynamically provisioned for the PVC. It filters out the PVs that are already bound to other PVCs, that have mismatching labels, or that belong to a different storage class.

  - When the Persistent Volume Controller finds a suitable PV, it binds the PVC to it.

NOTE: This logic is built into the PersistentVolume controller that is part of the Controller Manager and is consistent across _all_ storage classes.
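The binding algorithm described in this section can be sketched as a single reconciliation pass. This is a simplified model rather than the controller's actual code: the PV and PVC classes, the reconcile function, and the pvc- name prefix are hypothetical, label matching is omitted, and dynamic provisioning is reduced to creating a new in-memory object when no existing PV fits.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class PV:
    name: str
    storage_class: str
    bound_to: Optional[str] = None  # name of the PVC that holds this volume


@dataclass
class PVC:
    name: str
    storage_class: str
    volume_name: Optional[str] = None  # set when the claim asks for a specific PV


def reconcile(pvc: PVC, pvs: Dict[str, PV]) -> str:
    """Run one binding pass and return "bound" or "pending"."""
    if pvc.volume_name is not None:
        pv = pvs.get(pvc.volume_name)
        if pv is None:
            # Keep waiting: an administrator may provision the PV later.
            return "pending"
        if pv.bound_to not in (None, pvc.name):
            raise ValueError(f"{pv.name} is already bound to {pv.bound_to}")
        pv.bound_to = pvc.name
        return "bound"

    # No specific PV requested: skip bound PVs and other storage classes.
    for pv in pvs.values():
        if pv.bound_to is None and pv.storage_class == pvc.storage_class:
            pv.bound_to = pvc.name
            return "bound"

    # Nothing suitable exists, so model dynamic provisioning and bind the result.
    new_pv = PV(name=f"pvc-{pvc.name}", storage_class=pvc.storage_class, bound_to=pvc.name)
    pvs[new_pv.name] = new_pv
    return "bound"
```

In a real cluster this pass repeats continuously, which is why a claim for a missing PV stays pending instead of failing.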

Reclaim policies

Reconciliation loops continuously check the state of PVs. If the Persistent Volume Controller detects a PV with a missing PVC, for example, because the PVC was deleted, the Controller reclaims the PV.

Therefore, when a pod is deleted, the PVC remains in effect because the lifecycles of PVs and PVCs are independent of pods. A PVC needs to be deleted only when its data needs to be destroyed.

The following PV reclaim policies define what happens to a PV after its PVC is deleted:

- Retain: The Persistent Volume Controller does not perform any operations with the data. The data is retained.

- Recycle: The Persistent Volume Controller performs a basic scrub by executing the rm -rf /vol/* script.
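The difference between the two policies listed above can be sketched with a small file-system model. The reclaim function is hypothetical and only imitates the effect of each policy on a directory that stands in for the volume; it is not how the controller is implemented.

```python
import shutil
import tempfile
from pathlib import Path


def reclaim(volume_dir: Path, policy: str) -> None:
    """Apply a reclaim policy to a directory that stands in for a volume."""
    if policy == "Retain":
        return  # Leave the data untouched.
    if policy == "Recycle":
        for entry in volume_dir.iterdir():
            # Like the shell glob in rm -rf /vol/*, skip names that start with a dot.
            if entry.name.startswith("."):
                continue
            if entry.is_dir() and not entry.is_symlink():
                shutil.rmtree(entry)
            else:
                entry.unlink()
        return
    raise ValueError(f"unsupported reclaim policy: {policy}")


if __name__ == "__main__":
    # The demo runs against a throwaway temporary directory only.
    with tempfile.TemporaryDirectory() as tmp:
        vol = Path(tmp)
        (vol / "data.txt").write_text("payload")
        reclaim(vol, "Retain")
        print(sorted(p.name for p in vol.iterdir()))
        reclaim(vol, "Recycle")
        print(sorted(p.name for p in vol.iterdir()))
```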

OpenStack tenancy model

Because Kubernetes clusters provide access to the underlying OpenStack services, a tenancy model is an integral part of security for Rackspace Kubernetes-as-a-Service (KaaS).

KaaS uses the following tenancy workflow:

- KaaS creates a service account (tenant) that owns the Kubernetes cluster and all additional items, such as cinder volumes, load balancers, and so on. This account mitigates the risk of cluster deletion as a result of employee termination.

- Additionally, the service account buffer allows rational secret management. Resources that the cluster creates for itself fall under the service account for ease of debugging and management.

- The keystone-auth authentication shim system supports the OpenStack Identity service (keystone) authentication. Therefore, if a valid keystone user that is authorized to use the cluster logs in, they can use their keystone credentials.

- Additionally, because the cluster or clusters are not under a tenant account, operators can apply Security Contexts®, AppArmor®, and other rules on pods and the cluster or clusters that cannot be overridden by other users. If an organization requires ‘no root containers’ for users, then operators can restrict access by submitting a request.

Managed Services

KaaS Managed Services provide logging, monitoring, disaster recovery, and other features for Kubernetes clusters. The services leverage open-source infrastructure tools to give users and operators full visibility into what is happening inside the Kubernetes cluster and the underlying cloud platform.

For a high-level overview, see the Customer documentation.

Kubernetes-auth

Kubernetes-auth is an authentication API used by Kubernetes to outsource authentication. This service sits between Kubernetes and an identity provider, such as keystone, allowing Kubernetes users to use bearer tokens to authenticate against Kubernetes. These bearer tokens are tied back to cloud provider user accounts through kubernetes-auth, which prevents cloud provider credentials from passing directly through Kubernetes.

For more information, see Kubernetes Auth.

KaaS Control Panel

The KaaS Control Panel is the user interface (UI) that runs in the environment and enables Kubernetes users to log in, manage cluster resources, and access the Kubernetes clusters.

For more information, see KaaS Control Panel.

OAuth Proxy

KaaS Managed Services use multiple user interfaces to provide logging, monitoring, container registry, and other functionality. Each UI requires user login. To eliminate the need for repeatedly logging in to all the UIs, KaaS uses an authentication proxy.

KaaS uses OAuth2 Proxy to authorize users to use the KaaS Control Panel and to provide Single Sign-On (SSO) functionality. With OAuth2 Proxy, users sign in to the KaaS Control Panel once and can then access all associated UIs while the HTTP session is valid.

Clusters

A Kubernetes cluster that KaaS creates consists of the following main parts:

- Master nodes, or a control plane, that orchestrate application workloads. Master nodes run etcd, kube-apiserver, kube-scheduler, and so on.

- Worker nodes, or nodes, that run your applications. Node components include kubelet, kube-proxy, and a container runtime that is responsible for running containers. KaaS uses the rkt container engine.

Kubernetes nodes run on top of the virtual machines that KaaS creates on the underlying cloud provider during the cluster deployment.

This diagram gives a high-level overview of a Kubernetes cluster deployed by KaaS:

KaaS uses all the main upstream Kubernetes concepts. This section only outlines KaaS specifics, and all upstream Kubernetes concepts apply. If you are completely new to Kubernetes, see Kubernetes Basics.

Pods

User applications run in Kubernetes pods. Each pod runs one instance of an application and one container. KaaS does not deploy multiple containers per pod. For security reasons, pods cannot run as root or as privileged, and cannot use host networking or root namespaces. If you need to enable access to the outside infrastructure for some of your pods, you can do so by configuring Pod Security Policies (PSP).
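As an informal aid, the restrictions above can be checked against a pod manifest before you submit it. The find_violations helper below is hypothetical and is not part of KaaS or of the PSP mechanism; it inspects only standard pod spec fields (hostNetwork, hostPID, hostIPC, and the privileged and runAsUser security context settings) and flags root only when UID 0 is set explicitly.

```python
from typing import List


def find_violations(pod: dict) -> List[str]:
    """List settings in a pod manifest that conflict with the restrictions above."""
    spec = pod.get("spec", {})
    problems: List[str] = []

    # Host networking and host (root) namespaces.
    for flag in ("hostNetwork", "hostPID", "hostIPC"):
        if spec.get(flag):
            problems.append(f"{flag} is enabled")

    pod_ctx = spec.get("securityContext", {})
    for container in spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext", {})
        if ctx.get("privileged"):
            problems.append(f"container {name} is privileged")
        # A container-level runAsUser overrides the pod-level value.
        run_as_user = ctx.get("runAsUser", pod_ctx.get("runAsUser"))
        if run_as_user == 0:
            problems.append(f"container {name} runs as UID 0")
    return problems


if __name__ == "__main__":
    pod = {
        "spec": {
            "hostNetwork": True,
            "containers": [{"name": "app", "securityContext": {"privileged": True}}],
        }
    }
    for problem in find_violations(pod):
        print(problem)
```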

Namespaces

A Kubernetes namespace enables multiple teams of users to work in the same Kubernetes environment while being isolated from each other.

By default, KaaS creates the following namespaces for a Kubernetes cluster:

- default

- kube-public

- kube-system

- rackspace-system

- ingress-nginx (Amazon EKS only)

The default, kube-public, and kube-system namespaces are default Kubernetes namespaces. KaaS creates them during the cluster deployment. If you create a Kubernetes object without specifying a namespace, KaaS creates it in the default namespace.

Rackspace Managed Services run in the rackspace-system namespace. KaaS creates this namespace when you deploy managed services. This namespace is specific to the KaaS deployment.

In Amazon EKS deployments, KaaS deploys the nginx ingress controller in the ingress-nginx namespace. In the OpenStack deployments, KaaS deploys nginx in the rackspace-system namespace.

Tooling

KaaS uses the kaasctl (pronounced ‘kas-cuttle’) management tool that enables deployment and lifecycle management operations for your Kubernetes cluster. kaasctl leverages Kubespray and HashiCorp™ Terraform™ to deploy highly available Kubernetes clusters.

Kubespray is an automation tool that enables Kubernetes deployment in the cloud or on-premises. The main advantages of Kubespray include high availability support, network plug-ins of choice, and support for most of the enterprise cloud platforms. Kubespray is incorporated as a submodule in mk8s.

While Kubespray is responsible for deployment, it does not work without Terraform. Terraform provides functionality for creating configuration files for Kubernetes deployments. Kubespray uses the Terraform configuration template for the selected cloud provider to create a configuration that is stored in the terraform.tfvars file in your cluster directory.

kaasctl combines these two tools and provides a command-line interface (CLI) for running lifecycle management operations.

kaasctl runs in a container that is stored in the Rackspace Quay.io container registry. For testing purposes, you can use the latest kaasctl image. For production environments, use a stable version of kaasctl.

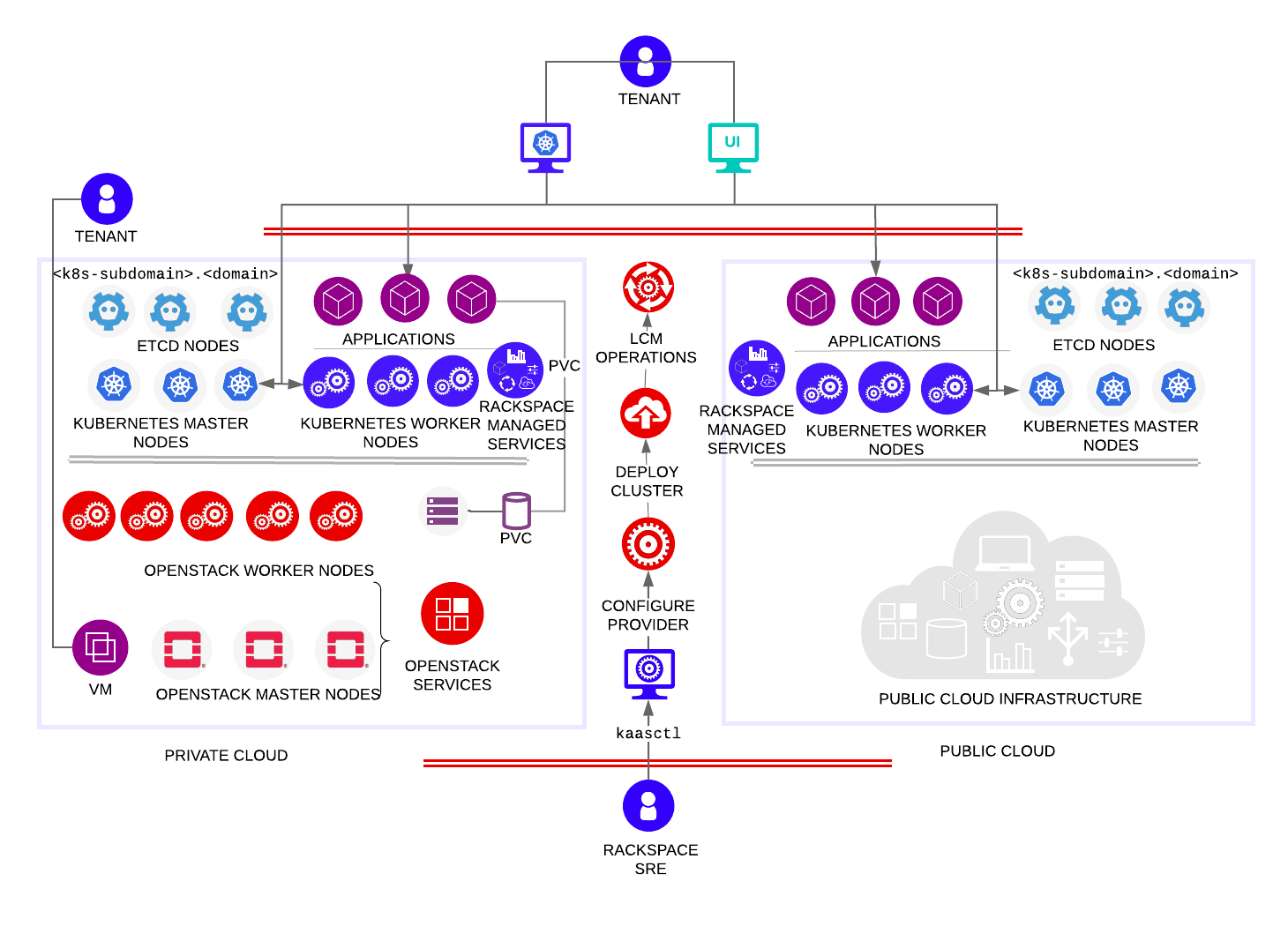

The following diagram shows kaasctl lifecycle management operations:

kaasctl performs the following operations:

- Preconfigures the cloud provider.

- Deploys a Kubernetes cluster.

- Deploys KaaS Managed Services.

- Validates the cluster deployment.

- Destroys a Kubernetes cluster and all the associated resources.

- Updates a Kubernetes cluster and KaaS components.

- Adds, removes, and replaces Kubernetes cluster nodes.

- Displays versions of Kubernetes and related components.

Bootstrap file

kaasctl uses a bootstrap.yaml configuration file to obtain the information about the cloud on which it needs to deploy the Kubernetes cluster. For each cloud provider, the bootstrap file has a specific configuration. All bootstrap files are stored securely under the Managed Kubernetes project in PasswordSafe. You must have permissions to access PasswordSafe.

Authentication and authorization

This section describes the authentication (AuthN) and authorization (AuthZ) aspects of KaaS.

Authentication is responsible for determining and verifying the identity of the user, while authorization determines the set of permissions that an authenticated user has.

Cluster admin ClusterRoleBinding

By default, Kubernetes role-based access control (RBAC) configuration is very restrictive and denies authorization to any users without a matching role binding. To allow an initial set of users to administer the cluster, operators must create a ClusterRoleBinding that matches users against a specific criterion and grants those users an administrative role. Typically, the initial set of users includes cluster administrators.

Matching users

Users’ identity information comes from kubernetes-auth, which derives its information from an identity provider, such as keystone. When Kubernetes communicates with kubernetes-auth to authenticate a user, it receives a username, a user ID, and a list of IDs for the roles to which the user is assigned. The most flexible piece of information to use for defining RBAC rules is one of the role IDs.
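The shape of the identity information described above can be sketched as follows. This example assumes that kubernetes-auth answers through the standard Kubernetes TokenReview API and that role IDs are returned in the groups field; the source does not state the exact mechanism, so treat both points, as well as the helper name and sample values, as assumptions.

```python
import json
from typing import List


def token_review_response(username: str, user_id: str, role_ids: List[str]) -> dict:
    """Build a TokenReview response for a successfully authenticated user."""
    return {
        "apiVersion": "authentication.k8s.io/v1",
        "kind": "TokenReview",
        "status": {
            "authenticated": True,
            "user": {
                "username": username,
                "uid": user_id,
                # Assumption: the identity provider's role IDs are exposed as groups.
                "groups": role_ids,
            },
        },
    }


if __name__ == "__main__":
    # All values below are made-up placeholders.
    print(json.dumps(token_review_response("jdoe", "user-1234", ["role-abcd"]), indent=2))
```

Under that assumption, an RBAC rule can match a role ID as a group subject, which is what makes role IDs the most flexible attribute to use.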

Granting a cluster-admin role

By default, Kubernetes includes the cluster-admin ClusterRole that enables users to create and delete all resource types in all namespaces, including RBAC resources. By granting the cluster-admin role to the desired users, KaaS ensures that they can modify resources on the cluster, as well as provision further access to the cluster for additional users as needed.

ClusterRoleBinding prerequisites

You can assign a role to any users that can administer the cluster.

NOTE: In OpenStack environments, an administrator must create this role before cluster deployment.

ClusterRoleBinding payload

The cluster-admin-rolebinding.yml manifest creates a ClusterRoleBinding that grants the cluster-admin ClusterRole to any user who is assigned the role described in the ClusterRoleBinding prerequisites section.
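The contents of cluster-admin-rolebinding.yml are not reproduced in this document. As a minimal sketch of what such a binding might look like, the following example builds a ClusterRoleBinding manifest that grants cluster-admin to a group named after a role ID. The binding name, the placeholder role ID, and the choice of a Group subject are assumptions, not the actual KaaS payload.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def cluster_admin_binding(role_id: str, name: str = "cluster-admin-binding") -> dict:
    """Return a ClusterRoleBinding that grants cluster-admin to one group."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": name},
        "subjects": [
            # Assumption: the identity provider's role ID is matched as a group name.
            {"kind": "Group", "name": role_id, "apiGroup": RBAC_API_GROUP}
        ],
        "roleRef": {
            "kind": "ClusterRole",
            "name": "cluster-admin",
            "apiGroup": RBAC_API_GROUP,
        },
    }


if __name__ == "__main__":
    # "example-role-id" is a placeholder, not a real role ID.
    print(json.dumps(cluster_admin_binding("example-role-id"), indent=2))
```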
